What is Lovable

A brief introduction: Lovable is one of the most popular new AI tools for building apps and websites by chatting with an AI, generating entire web apps from text prompts.

It is one of the fastest-growing companies ever, reaching unicorn status within a few months of its release.

I highly recommend Lovable to anyone thinking about prototyping an app.

What did I use it for

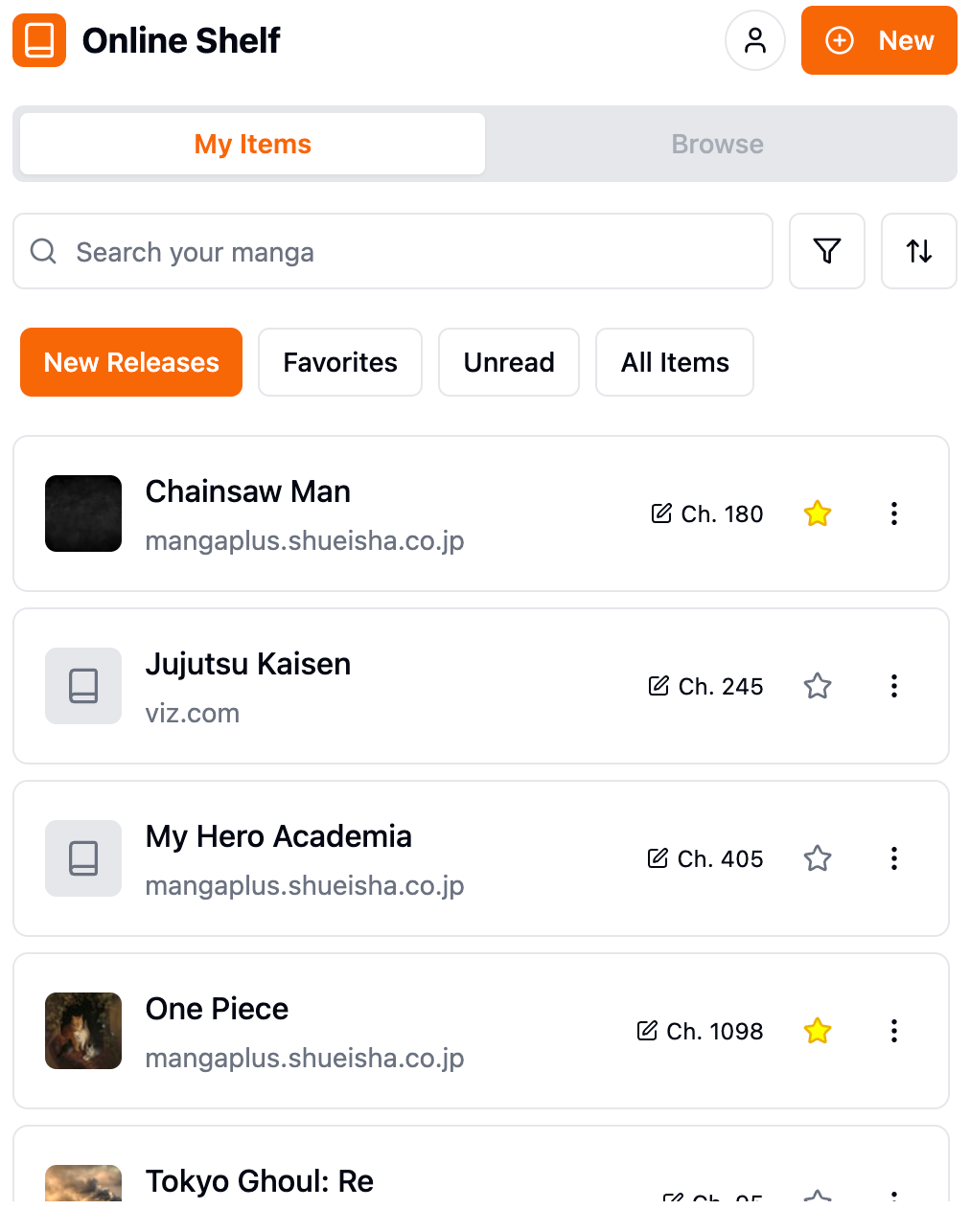

As a nerd, my objective was to build a Chrome extension to help me keep track of the dozens of comics I read online. I already had a Notion database for this, but updating it was cumbersome: I had to open it, wait for it to load, find the comic I wanted to update and type in the new value. I wanted to streamline the process with fewer clicks and better responsiveness, and to add a few cool features, like getting a notification when a new chapter should already have been released.
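The post doesn't describe how the "new chapter should already be out" notification works. One plausible approach, sketched below under the assumption that a comic's next chapter follows the median gap between its past releases, is to estimate the next release date and flag the comic once that date has passed. The function names `expected_next_release` and `is_overdue` are hypothetical and not taken from the actual extension.

```python
from __future__ import annotations

from datetime import date, timedelta
from statistics import median
from typing import Optional


def expected_next_release(release_dates: list[date]) -> Optional[date]:
    """Hypothetical estimate: last release date plus the median gap between releases."""
    if len(release_dates) < 2:
        return None  # not enough history to infer a release schedule
    ordered = sorted(release_dates)
    gaps = [(later - earlier).days for earlier, later in zip(ordered, ordered[1:])]
    return ordered[-1] + timedelta(days=round(median(gaps)))


def is_overdue(release_dates: list[date], today: date) -> bool:
    """True when the estimated release date has already arrived or passed."""
    expected = expected_next_release(release_dates)
    return expected is not None and today >= expected


# Usage: a weekly comic whose last chapter came out on 2024-01-15.
history = [date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15)]
print(expected_next_release(history))          # 2024-01-22
print(is_overdue(history, date(2024, 1, 23)))  # True
```

The median is used instead of the mean so that a single hiatus doesn't push the estimate far into the future; a real extension would also need to store the release history somewhere, such as the Notion database mentioned above.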

Working with Lovable

I now understand why Lovable grew so much in such a short time. It is an amazing tool for non-developers, and it is extremely useful for getting a web app up and running even for developers, as it also makes UX/UI decisions that developers (especially backend ones) struggle with. Most startups, and even internal company tools, which usually don't get much love (meaning priority, time or money), will probably be built using tools like Lovable from now on.

Using it to build my Chrome extension was amazing. A single prompt produced most of the extension's foundation, and it easily got at least 95% of what I meant in my prompts.

That said, for now the tool is only useful at the start of a project (and I can't emphasize enough how useful it is there); it cannot be used reliably to assist developers in the long run. I also don’t see it being used on an existing codebase.

For starters, it doesn’t offer a good experience for common developer practices:

It doesn’t have branches, so all Lovable changes are applied directly to production

Since it doesn't use branches, it also doesn’t open an MR (merge request) for review before applying changes (though you can later see the diff of the applied commit in GitHub or in your local environment)

Additionally, like most of the codebase-aware coding tools I've seen, it is prone to unnecessary refactors and to touching code unrelated to the current prompt. Like any unnecessary refactor, these changes clog the git history and make reviews harder, and they are even worse when they cause UI changes that shouldn't have happened. If it opened MRs (for those who want them), it would be easier to undo these kinds of changes. Some of this noise could even be filtered out without any AI, like random changes to the order of imports, which happened a lot.
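As an illustration of the "without the use of AI" point, here is a minimal sketch that detects when a file's only change is a reordering of its import lines, so the change could be flagged or reverted automatically. It assumes TypeScript/JavaScript sources (the stack Lovable chose for this project) and only handles single-line `import ...` statements; multi-line imports would need a real parser. The helper names are hypothetical.

```python
from __future__ import annotations


def split_imports(source: str) -> tuple[list[str], list[str]]:
    """Separate single-line `import ...` statements from the rest of a TS/JS file."""
    imports: list[str] = []
    body: list[str] = []
    for line in source.splitlines():
        if line.lstrip().startswith("import "):
            imports.append(line.strip())
        else:
            body.append(line)
    return imports, body


def only_import_order_changed(old: str, new: str) -> bool:
    """True if the two versions differ only in the order of their import lines."""
    old_imports, old_body = split_imports(old)
    new_imports, new_body = split_imports(new)
    return (
        old_body == new_body                             # non-import code untouched
        and old_imports != new_imports                   # something actually moved
        and sorted(old_imports) == sorted(new_imports)   # same imports, new order
    )


before = 'import React from "react";\nimport { Bell } from "lucide-react";\n\nexport const App = () => null;\n'
after = 'import { Bell } from "lucide-react";\nimport React from "react";\n\nexport const App = () => null;\n'
print(only_import_order_changed(before, after))  # True
```

A check like this could run over every file in a generated commit and keep the original version whenever it returns `True`, shrinking the diff a reviewer has to read.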

This also clashes with the way pricing is currently set up. Payment is “pay-per-prompt”1, so it is cheaper to give Lovable longer prompts with multiple requests at once, but that means many changes in a single run, and since each run generates a single commit, rolling back just one of those changes is really painful.

In my opinion, one of the next big features for this kind of tool should be a way to restrict in advance which files may be changed, and/or a more non-technical-friendly way to select which components should be targeted. It could also be a “lock” feature that prevents the code generator from changing certain parts of the code or UI until they are “unlocked”.
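Lovable doesn't offer such a lock today, but the file-level part of the idea can be sketched in a few lines: given the paths a generated change touched and a list of locked glob patterns, report which locked files were modified. The lock list and the `locked_violations` function are hypothetical; note that in Python's `fnmatch`, `*` also matches `/`, so `*.md` covers Markdown files in any directory.

```python
from fnmatch import fnmatchcase


def locked_violations(changed_files, locked_patterns):
    """Return the changed paths that match any locked glob pattern."""
    return [
        path
        for path in changed_files
        if any(fnmatchcase(path, pattern) for pattern in locked_patterns)
    ]


# Hypothetical lock list: the header component and all Markdown files are off-limits.
locked = ["src/components/Header.tsx", "*.md"]
changed = ["src/components/Header.tsx", "src/lib/storage.ts", "README.md"]
print(locked_violations(changed, locked))  # ['src/components/Header.tsx', 'README.md']
```

In a local workflow, the list of changed files could come from `git diff --name-only` after pulling a Lovable commit, with the commit rejected or partially reverted whenever the result is non-empty.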

There could also be extra guardrails on the output, like checking “Are these changes relevant?” or “Can the code changes be smaller?”. Maybe these are already in place, but they are not as effective as I'd like. This raises the question: “how much effort and time (in other words, money) should be spent on a single prompt?”. This brings us back to cost: adding review steps before the output would definitely increase costs, which doesn't make sense under a flat pay-per-prompt model. That is why I think better pricing models are imperative for better tools.

We are currently in a world that expects everything fast. But how fast does it actually need to be? Improving correctness at the cost of slower responses may be a good trade-off in many cases (maybe not all), especially after the first few iterations, when unwanted changes start to become costlier. It also doesn't make sense to optimize for an instant response if you take 30 minutes to an hour planning your next feature request and writing your next prompt.

Here are a few tips from working with Lovable for a few days:

Tips

To make better use of your money, use longer prompts. Lovable's free tier of 5 prompts a day is actually quite generous if you plan before prompting, since a good prompt is really similar to a task description in Jira/Trello, which, as any product manager would tell you, takes a lot of time to write. If you use Lovable as a back-and-forth chat agent, you’ll easily burn through your credits with little gain per prompt;

If you are on the free tier with 5 prompts a day, plan on using 1 or 2 of them to correct errors or unwanted changes;

I’ve learned the hard way to avoid phrases like “let's break it down step by step” or “apply one change at a time” in large prompts, as this may make Lovable apply only the first request in the prompt;

Asking Lovable to avoid unnecessary refactors didn't seem to have much effect. I'm not sure what the best way to deal with this is yet;

Reading the code really helps with prompting: naming the classes and functions you want changed helps Lovable make the correct changes;

Love the project, don't love the code. Lovable will definitely touch code you don't want touched, so only refactor the code to your taste after you are done with Lovable;

Don't expect perfection for things that rely on newer documentation or exact code, like API integrations. In my case, I wanted to use Notion as my extension's backup, which I ended up implementing manually after a few unsuccessful prompts, as the Notion API is relatively new and has limited documentation;

It is possible to use Lovable to guide you through and explain parts of the code2, but given the pricing model, you are probably better off using other AI tools directly in your IDE.

Extra - The power and responsibility of AI

This experience consolidated something in my mind: whoever controls the model controls the truth.

The AI is (and needs to be) highly opinionated about which tools and frameworks it should use; otherwise nothing would get done.

Lovable decided, without asking me, getting my approval or even telling me, to use React, Tailwind and Lucide, among other things, in my project. This seems harmless since it chose free, open source libraries, but imagine asking Lovable or another AI tool to add usage metrics to your backend service and it decides to use Datadog or New Relic as the main monitoring tool. This could make it even harder for new companies to grow their business.

This means AI will be a huge boost to products that are able to influence the models, just like with search engines, but probably an order of magnitude larger, since users won't even be made aware of the choices being made or of the alternatives.

Final Thoughts

Regardless of any of the current shortcomings I’ve listed here, I think Lovable is an amazing tool to prototype your product with, and it will only get better with time.

I'm not part of Lovable’s team, so I'm not sure about their business strategy. They may never consider developers their main clients, and they may not need to, focusing more on non-technical people, so these nice-to-have developer features may never come to Lovable. But other companies are working on better developer tools, like the Claude Code CLI, which I'll cover in a future post.

Pay-per-prompt/token pricing has always seemed like a strange business model to me. It incentivizes small output mistakes that make the user spend more on the platform. I know most people working there are probably trying to build a great product, and there is a threshold where people will stop using a product that is too bad, but this means there will probably be a sweet spot between cost and revenue for how good the tool should be. The only fix I can see, and what I think should be the future, is running models locally or making pricing more like AWS EC2, so the price is not tied to the number of tokens or prompts, but I'm not sure how feasible this is for now. Pay-per-prompt/token will probably remain the norm until a big player decides to change it.

It doesn’t have to be Lovable since this is not the core of their business, but any tool able to use the whole codebase as an input can be an amazing learning tool for new developers in an organization.